avery

Wetware Developer

So I co-founded a hackerspace with 2 friends about a year ago, which has only really been operational for about 5(?) months. Things are somewhat stable; we’re covering about 2/3 of our operating costs already, so we feel comfortable calling it a success. I’m gonna try to distill the early wisdom before I lose the perspective.

Too Many Cooks: The Hackerspace Volunteer Contribution Model

The demographic that frequents a hackerspace is financially and emotionally invested in tech. Contributors are very enthusiastic about contributing[1], but flaky. Volunteer output ebbs and flows according to passion, on-call status, the job market, etc.; we are the first chore that gets dropped when you’re burnt out. The first thing to understand about coordinating nerds to bootstrap infrastructure for free is that we aren’t remotely consistent.

When we dumped our first 2U on the floor of the server room, before we even had racks, we had Proxmox. It’s a simple hypervisor, and most of our services are still guests on that 2U; while I’m going to speak at length about its many flaws, I don’t think starting with Proxmox was a mistake.

Before we had settled on responsibilities, over the course of 2 nights, one of our members:

- set up Proxmox

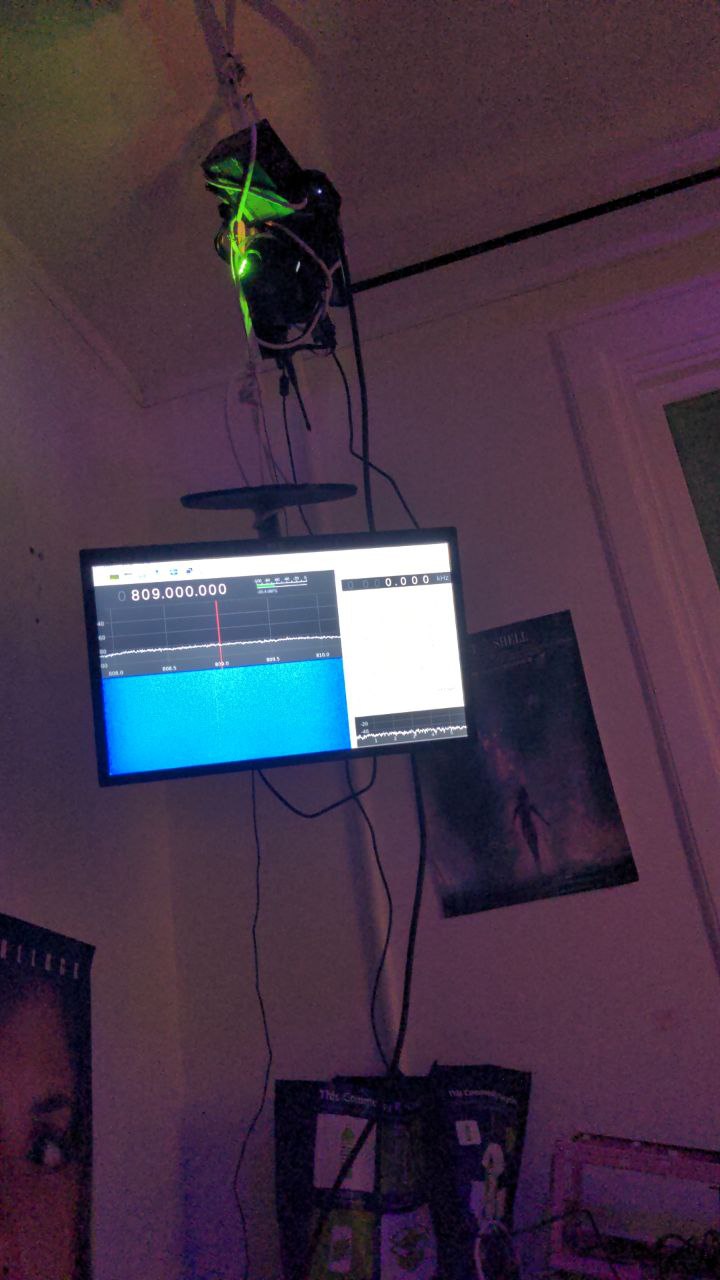

- hacked together a door opener[2] with a Telegram bot and a relay

- configured an IoT network to house said relay

- disappeared without documenting anything, and hasn’t returned

A microcosm, especially of the first few months, when we met sporadically to vivify a hastily evacuated[3] WeWork hub. I will spare you the tedium of leasing, furniture acquisition[4], and access control; nothing technological happened for the first 3 months, and by then, we’d learned the heat was broken.
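The door opener’s actual code isn’t documented (which is rather the point), but the general shape of a Telegram-bot-plus-relay opener is easy to sketch. This is a minimal sketch, assuming the bot long-polls the Telegram Bot API’s `getUpdates` method, answers a hypothetical `/open` command, checks senders against a hypothetical allowlist of Telegram user IDs, and calls a hypothetical `pulse_relay()` hook to actuate the relay; none of these names come from Devhack’s setup.

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request
from typing import Callable

# Telegram Bot API endpoint pattern; the token would come from @BotFather.
API_URL = "https://api.telegram.org/bot{token}/{method}"


def handle_update(update: dict, allowed_ids: set[int],
                  pulse_relay: Callable[[], None]) -> str | None:
    """Decide what to do with one update; return reply text, or None to stay quiet."""
    message = update.get("message") or {}
    text = (message.get("text") or "").strip()
    # "/open" and "/open@SomeBot" both count; anything else is ignored.
    command = text.split()[0].split("@")[0] if text else ""
    if command != "/open":
        return None
    if (message.get("from") or {}).get("id") not in allowed_ids:
        return "not authorized"
    pulse_relay()  # hypothetical hook that briefly closes the door relay
    return "door opened"


def call(token: str, method: str, **params) -> dict:
    """POST form-encoded parameters to a Bot API method and decode the JSON reply."""
    url = API_URL.format(token=token, method=method)
    body = urllib.parse.urlencode(params).encode()
    with urllib.request.urlopen(url, data=body, timeout=60) as resp:
        return json.load(resp)


def run(token: str, allowed_ids: set[int], pulse_relay: Callable[[], None]) -> None:
    """Long-poll getUpdates forever, answering each /open request."""
    offset = 0
    while True:
        batch = call(token, "getUpdates", offset=offset, timeout=30)
        for update in batch.get("result", []):
            offset = update["update_id"] + 1  # confirm receipt so it isn't redelivered
            reply = handle_update(update, allowed_ids, pulse_relay)
            if reply is not None:
                call(token, "sendMessage",
                     chat_id=update["message"]["chat"]["id"], text=reply)
```

Keeping `handle_update` free of network calls means the authorization logic can be tested without a real bot or relay. What `pulse_relay` actually does (a GPIO pin, a smart plug, something worse) is left open, since that hardware isn’t described here.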

How I accidentally got put in charge of infrastructure

Devhack resembled a sort of commercial freezer during the first winter, and rewilding the sterile, 50°F office environment was my first charge. Of the 3 of us, I alone expressed aesthetic concerns, and being the least technical, I was the obvious choice to theme and lay out the space[5].

This didn’t last. I made obvious decisions about space usage, themed rooms, and contracted a tiered-couch platform… but I was also the only underemployed one; I had the most free time.

We’d host an open hack night, and the other two would have obligations: A was on call, B was taking a mental health break from tech, A was working late, B went on an extended European burnout-vacation, etc. I was the only consistent event host. As people settled into their roles, the need for a dedicated event host dissolved[6], but every Thursday from about 5 pm to 1 am, I took care of the space.

Being consistent and a co-founder made me the point of contact for authorization. Most members assumed I knew everything about our projects[7]. I’m not some savant; I just had like 20 hours a week to complete tasks like “give the printer a CUPS container we can send files to so we don’t need drivers on each machine”, “find out why the nodes can’t talk to each other”, “proxmox HA”, or “make auth happen”. I failed to complete about half of these tasks in a reasonable timeframe.

Anarchy, State, and Utopia

Volunteer churn resulted in, at best, a lot of half-finished and duplicated work. Half a dozen people with admin access to the hypervisor kept stepping on each other’s toes, causing downtime. Downtime fosters a desperation, a frustration, and an arrogance about the current infra; more downtime means more breaking means more downtime. MediaWiki documentation was a stopgap, but service interdependency propagates downtime: when one service goes down, everything that depends on it goes down with it.
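To make the propagation concrete: if each service lists what it depends on, one outage takes down everything that transitively depends on it. The sketch below walks the reversed dependency graph breadth-first. The service names and edges are hypothetical examples, not Devhack’s real topology.

```python
from collections import deque


def affected_by(failed: str, depends_on: dict[str, set[str]]) -> set[str]:
    """Return every service that is down once `failed` goes down (including itself)."""
    # Invert the graph: for each service, which services depend on it?
    dependents: dict[str, set[str]] = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)
    # Breadth-first walk over dependents, starting from the failed service.
    down = {failed}
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for svc in dependents.get(current, set()):
            if svc not in down:
                down.add(svc)
                queue.append(svc)
    return down


# Hypothetical topology: everything lives on one hypervisor, and auth gates the wiki.
DEPENDS_ON = {
    "hypervisor": set(),
    "dns": {"hypervisor"},
    "auth": {"hypervisor", "dns"},
    "wiki": {"hypervisor", "auth"},
    "door-bot": {"dns"},
    "printer": {"hypervisor"},
}

print(sorted(affected_by("dns", DEPENDS_ON)))  # ['auth', 'dns', 'door-bot', 'wiki']
```

A single DNS hiccup takes out four of six services here, and losing the hypervisor takes out all of them, which is the “more downtime means more downtime” loop in miniature.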

We debated, for several months, what would replace our hell. I decided we needed declarative IaC (infrastructure as code) wherever possible. Without a test environment, being able to regenerate our infrastructure from a git repo and a shell script would at least give us a nuclear option that spared us extended downtime. The aforementioned employee #1’s European vacation left Devhack’s infra design to me, the sysadmin with no experience. I asked the half-dozen homelab enthusiasts for advice and got as many different answers, so I spent a while reading about bare-metal devops stacks on Reddit. Ultimately, two things became clear to me:

- Kubernetes, being (mostly) declarative, scalable, over-engineered, and popular, is the perfect choice for enthusiasts with colocation+hosting ambitions

- Whatever Kubernetes is built on top of needs to be declarative enough to rebuild everything from a git repo
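The appeal of “declarative” is that the repo holds the desired state and a tool works out how to reach it. Below is a toy version of that planning step, assuming resources are just names mapped to attribute dicts. Real tools like Terraform/OpenTofu and Kubernetes controllers also track dependencies, state files, and drift, so treat this as an illustration of the idea only.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    create: list[str] = field(default_factory=list)
    update: list[str] = field(default_factory=list)
    delete: list[str] = field(default_factory=list)


def plan(desired: dict[str, dict], actual: dict[str, dict]) -> Plan:
    """Diff desired state (from the repo) against actual state (what is running)."""
    p = Plan()
    for name in sorted(desired):
        if name not in actual:
            p.create.append(name)
        elif desired[name] != actual[name]:
            p.update.append(name)
    for name in sorted(actual):
        if name not in desired:
            p.delete.append(name)  # running but undeclared, e.g. a hand-made VM
    return p


# Hypothetical resources, purely for illustration.
desired = {"wiki": {"cpus": 2}, "auth": {"cpus": 1}}
actual = {"wiki": {"cpus": 1}, "mystery-vm": {"cpus": 4}}
print(plan(desired, actual))
# Plan(create=['auth'], update=['wiki'], delete=['mystery-vm'])
```

Because the plan is computed from the repo every time, running it against an empty cluster rebuilds everything. That is the nuclear option, and it is also why undeclared hand edits get flagged for deletion instead of silently accumulating.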

One of the professionals had a Terraform -> Kubernetes stack that he used for everything and was willing to teach me, so that was an easy decision. Unfortunately, he moved away after 3 weeks, so he taught me what he could in that time. Chuie got me started declaratively and taught me some best practices (thanks <3).

I got overzealous with the declarations and wanted to declare the hypervisor layer too (mostly out of curiosity). To generate images, I thought I’d use Packer: same language (HCL), nice interoperability. I lost a week trying to wrangle Packer and Terraform on our servers; these tools are made for cloud infrastructure. People suggested Chef, Ansible, MAAS… I wasn’t going to add more complexity. Our images are made by fetching the latest Debian netinst ISO and repacking it with my script[8] to bundle a Debian preseed.cfg for unattended installation. Then, a Terraform[9] provisioner takes the installation and customizes it per machine. Good enough; I’ll trade Debian lock-in[10] for simplicity[11].
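The repacking script isn’t shown here, but the core trick can be sketched. Debian’s installation guide documents initrd preseeding: a file named `preseed.cfg` in the root of the installer’s initrd is loaded automatically. The Linux kernel’s initramfs format also allows several (optionally compressed) cpio archives to be concatenated. So one way in, swapped in here instead of the usual unpack-and-repack, is to build a tiny gzip’d “newc” cpio archive holding only `preseed.cfg` and append it to the ISO’s `initrd.gz`. The function names and any paths are hypothetical, and the ISO itself still has to be rebuilt afterward.

```python
import gzip


def _pad4(n: int) -> bytes:
    """NUL bytes needed to round a length up to a multiple of 4 (newc alignment)."""
    return b"\0" * (-n % 4)


def newc_entry(name: str, data: bytes, mode: int, ino: int) -> bytes:
    """Encode one cpio "newc" record: 110-byte ASCII-hex header, name, then data."""
    name_bytes = name.encode() + b"\0"  # namesize counts the trailing NUL
    fields = [
        ino, mode,
        0, 0,             # uid, gid: root
        1,                # nlink
        0,                # mtime
        len(data),        # filesize
        0, 0, 0, 0,       # devmajor, devminor, rdevmajor, rdevminor
        len(name_bytes),  # namesize
        0,                # check (always 0 in newc)
    ]
    header = b"070701" + b"".join(b"%08x" % f for f in fields)
    record = header + name_bytes + _pad4(len(header) + len(name_bytes))
    return record + data + _pad4(len(data))


def preseed_cpio_gz(preseed: bytes) -> bytes:
    """Gzip'd newc archive containing only /preseed.cfg plus the end-of-archive marker."""
    archive = newc_entry("preseed.cfg", preseed, 0o100644, ino=1)  # regular file, rw-r--r--
    archive += newc_entry("TRAILER!!!", b"", 0, ino=0)
    return gzip.compress(archive)


def append_preseed(initrd_gz_path: str, preseed: bytes) -> None:
    """Append the archive to an installer initrd.gz extracted from the ISO."""
    with open(initrd_gz_path, "ab") as initrd:
        initrd.write(preseed_cpio_gz(preseed))
```

On an unpacked amd64 netinst tree this might be called as `append_preseed("isofiles/install.amd/initrd.gz", Path("preseed.cfg").read_bytes())` (hypothetical paths, with `pathlib.Path`). As the footnote warns, verifying that the installer really picked it up means booting the result in a VM.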

It took three months or so to get the cluster to an acceptable state. I must thank Finn for picking me up where I stalled (cluster networking) and guiding us toward best practices. Our biggest delays:

- Renumbering the network about halfway through

- Multiple people editing the switch configuration, causing inter-VLAN routing failures in the cluster

- The wiki dying after we migrated its VM, because Proxmox does not play well with NixOS
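Renumbering hurts mostly because addresses are baked into configs everywhere. If the new plan keeps each host at the same offset within its subnet, though, the mapping itself is mechanical. This is a minimal sketch using Python’s standard `ipaddress` module; the preserve-the-offset rule and both example subnets are assumptions for illustration, not Devhack’s actual addressing plan.

```python
import ipaddress


def renumber(addr: str, old_net: str, new_net: str) -> str:
    """Map an address to the same host offset in a new subnet (hypothetical policy)."""
    old = ipaddress.ip_network(old_net)
    new = ipaddress.ip_network(new_net)
    ip = ipaddress.ip_address(addr)
    if ip not in old:
        raise ValueError(f"{addr} is not in {old_net}")
    offset = int(ip) - int(old.network_address)
    if offset >= new.num_addresses:
        raise ValueError(f"{new_net} is too small for offset {offset}")
    return str(new.network_address + offset)


print(renumber("192.168.1.20", "192.168.1.0/24", "10.0.10.0/24"))  # 10.0.10.20
```

Generating the new addresses is the easy half; finding every static lease, DNS record, and switch ACL that mentions the old ones is where the months go.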

The cluster is at least fully operational now. There’s a lot of k8s-specific boilerplate, which we don’t think we can protect our members from. They don’t have to interact with Terraform. Good enough for now.

[1] particularly in the greenfield stage

[2] the Devhack door has become a sort of biblical horror, perhaps deserving of its own blog post.

[3] the plants were left to die of thirst, the utility closet wasn’t traversable, the heat didn’t work, etc.

[4] most of our stuff came from the UW surplus store, friends, and their trucks. Hard to beat $5 whiteboards.

[5] and, in theory, learn by osmosis.

[6] I’m writing this from ToorCamp with the other 2. Tomorrow will be the first time one of us has not run open hack night.

[7] This is a great way to develop Imposter Syndrome, by the way.

[8] This is a surprisingly complicated process. Distro installation images are usually read-only, and you have to supply initrd + kernel command-line options, and debugging requires doing this in a VM…

[9] HashiCorp relicensed Terraform under the non-open-source Business Source License, so I moved us to OpenTofu, an open-source fork that is the same, for now.

[10] Devhack tradition dictates one must take a shot every time one installs Debian. One of our members built an eternal Debian installation. It has broken one hard drive and one CD drive thus far.

[11] I’m not even going to use this now that I know enough NixOS internals.